Reproducing Key Figures from Kay et al. (2015) Paper¶

Introduction¶

This Jupyter Notebook demonstrates how one might use the NCAR Community Earth System Model (CESM) Large Ensemble (LENS) data hosted on AWS S3 (doi:10.26024/wt24-5j82). The notebook shows how to reproduce figures 2 and 4 from the Kay et al. (2015) paper describing the CESM LENS dataset (doi:10.1175/BAMS-D-13-00255.1)

This resource is intended to be helpful for people not familiar with elements of the Pangeo framework including Jupyter Notebooks, Xarray, and Zarr data format, or with the original paper, so it includes additional explanation.

Set up environment¶

[1]:

# Display output of plots directly in Notebook

%matplotlib inline

import warnings

warnings.filterwarnings("ignore")

import intake

import numpy as np

import pandas as pd

import xarray as xr

Create and Connect to Dask Distributed Cluster¶

[2]:

# Create cluster

from dask_gateway import Gateway

from dask.distributed import Client

gateway = Gateway()

cluster = gateway.new_cluster()

cluster.adapt(minimum=2, maximum=100)

# Connect to cluster

client = Client(cluster)

# Display cluster dashboard URL

cluster

☝️ Link to scheduler dashboard will appear above.

Load data into xarray from a catalog using intake-esm¶

[3]:

# Open collection description file

catalog_url = 'https://ncar-cesm-lens.s3-us-west-2.amazonaws.com/catalogs/aws-cesm1-le.json'

col = intake.open_esm_datastore(catalog_url)

col

aws-cesm1-le catalog with 56 dataset(s) from 409 asset(s):

| unique | |

|---|---|

| component | 5 |

| frequency | 6 |

| experiment | 4 |

| variable | 65 |

| path | 394 |

| variable_long_name | 62 |

| dim_per_tstep | 3 |

| start | 13 |

| end | 12 |

[4]:

# Show the first few lines of the catalog

col.df.head(10)

[4]:

| component | frequency | experiment | variable | path | variable_long_name | dim_per_tstep | start | end | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | atm | daily | 20C | FLNS | s3://ncar-cesm-lens/atm/daily/cesmLE-20C-FLNS.... | net longwave flux at surface | 2.0 | 1920-01-01 12:00:00 | 2005-12-30 12:00:00 |

| 1 | atm | daily | 20C | FLNSC | s3://ncar-cesm-lens/atm/daily/cesmLE-20C-FLNSC... | clearsky net longwave flux at surface | 2.0 | 1920-01-01 12:00:00 | 2005-12-30 12:00:00 |

| 2 | atm | daily | 20C | FLUT | s3://ncar-cesm-lens/atm/daily/cesmLE-20C-FLUT.... | upwelling longwave flux at top of model | 2.0 | 1920-01-01 12:00:00 | 2005-12-30 12:00:00 |

| 3 | atm | daily | 20C | FSNS | s3://ncar-cesm-lens/atm/daily/cesmLE-20C-FSNS.... | net solar flux at surface | 2.0 | 1920-01-01 12:00:00 | 2005-12-30 12:00:00 |

| 4 | atm | daily | 20C | FSNSC | s3://ncar-cesm-lens/atm/daily/cesmLE-20C-FSNSC... | clearsky net solar flux at surface | 2.0 | 1920-01-01 12:00:00 | 2005-12-30 12:00:00 |

| 5 | atm | daily | 20C | FSNTOA | s3://ncar-cesm-lens/atm/daily/cesmLE-20C-FSNTO... | net solar flux at top of atmosphere | 2.0 | 1920-01-01 12:00:00 | 2005-12-30 12:00:00 |

| 6 | atm | daily | 20C | ICEFRAC | s3://ncar-cesm-lens/atm/daily/cesmLE-20C-ICEFR... | fraction of sfc area covered by sea-ice | 2.0 | 1920-01-01 12:00:00 | 2005-12-30 12:00:00 |

| 7 | atm | daily | 20C | LHFLX | s3://ncar-cesm-lens/atm/daily/cesmLE-20C-LHFLX... | surface latent heat flux | 2.0 | 1920-01-01 12:00:00 | 2005-12-30 12:00:00 |

| 8 | atm | daily | 20C | PRECL | s3://ncar-cesm-lens/atm/daily/cesmLE-20C-PRECL... | large-scale (stable) precipitation rate (liq +... | 2.0 | 1920-01-01 12:00:00 | 2005-12-30 12:00:00 |

| 9 | atm | daily | 20C | PRECSC | s3://ncar-cesm-lens/atm/daily/cesmLE-20C-PRECS... | convective snow rate (water equivalent) | 2.0 | 1920-01-01 12:00:00 | 2005-12-30 12:00:00 |

[5]:

# Show expanded version of collection structure with details

import pprint

uniques = col.unique(columns=["component", "frequency", "experiment", "variable"])

pprint.pprint(uniques, compact=True, indent=4)

{ 'component': { 'count': 5,

'values': ['atm', 'ice_nh', 'ice_sh', 'lnd', 'ocn']},

'experiment': {'count': 4, 'values': ['20C', 'CTRL', 'HIST', 'RCP85']},

'frequency': { 'count': 6,

'values': [ 'daily', 'hourly6-1990-2005',

'hourly6-2026-2035', 'hourly6-2071-2080',

'monthly', 'static']},

'variable': { 'count': 65,

'values': [ 'DIC', 'DOC', 'FLNS', 'FLNSC', 'FLUT', 'FSNO',

'FSNS', 'FSNSC', 'FSNTOA', 'FW', 'H2OSNO',

'HMXL', 'ICEFRAC', 'LHFLX', 'O2', 'PD',

'PRECC', 'PRECL', 'PRECSC', 'PRECSL', 'PSL',

'Q', 'QFLUX', 'QRUNOFF', 'QSW_HBL', 'QSW_HTP',

'RAIN', 'RESID_S', 'SALT', 'SFWF',

'SFWF_WRST', 'SHF', 'SHFLX', 'SHF_QSW',

'SNOW', 'SOILLIQ', 'SOILWATER_10CM', 'SSH',

'SST', 'T', 'TAUX', 'TAUX2', 'TAUY', 'TAUY2',

'TEMP', 'TMQ', 'TREFHT', 'TREFHTMN',

'TREFHTMX', 'TS', 'U', 'UES', 'UET', 'UVEL',

'VNS', 'VNT', 'VVEL', 'WTS', 'WTT', 'WVEL',

'Z3', 'aice', 'aice_d', 'hi', 'hi_d']}}

Extract data needed to construct Figure 2 of Kay et al. paper¶

Search the catalog to find the desired data, in this case the reference height temperature of the atmosphere, at daily time resolution, for the Historical, 20th Century, and RCP8.5 (IPCC Representative Concentration Pathway 8.5) experiments

[6]:

col_subset = col.search(frequency=["daily", "monthly"], component="atm", variable="TREFHT",

experiment=["20C", "RCP85", "HIST"])

col_subset

aws-cesm1-le catalog with 6 dataset(s) from 6 asset(s):

| unique | |

|---|---|

| component | 1 |

| frequency | 2 |

| experiment | 3 |

| variable | 1 |

| path | 6 |

| variable_long_name | 1 |

| dim_per_tstep | 2 |

| start | 6 |

| end | 6 |

[7]:

col_subset.df

[7]:

| component | frequency | experiment | variable | path | variable_long_name | dim_per_tstep | start | end | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | atm | daily | 20C | TREFHT | s3://ncar-cesm-lens/atm/daily/cesmLE-20C-TREFH... | reference height temperature | 2.0 | 1920-01-01 12:00:00 | 2005-12-30 12:00:00 |

| 1 | atm | daily | HIST | TREFHT | s3://ncar-cesm-lens/atm/daily/cesmLE-HIST-TREF... | reference height temperature | 1.0 | 1850-01-01 00:00:00 | 1919-12-31 12:00:00 |

| 2 | atm | daily | RCP85 | TREFHT | s3://ncar-cesm-lens/atm/daily/cesmLE-RCP85-TRE... | reference height temperature | 2.0 | 2006-01-01 12:00:00 | 2100-12-31 12:00:00 |

| 3 | atm | monthly | 20C | TREFHT | s3://ncar-cesm-lens/atm/monthly/cesmLE-20C-TRE... | reference height temperature | 2.0 | 1920-01-16 12:00:00 | 2005-12-16 12:00:00 |

| 4 | atm | monthly | HIST | TREFHT | s3://ncar-cesm-lens/atm/monthly/cesmLE-HIST-TR... | reference height temperature | 1.0 | 1850-01-16 12:00:00 | 1919-12-16 12:00:00 |

| 5 | atm | monthly | RCP85 | TREFHT | s3://ncar-cesm-lens/atm/monthly/cesmLE-RCP85-T... | reference height temperature | 2.0 | 2006-01-16 12:00:00 | 2100-12-16 12:00:00 |

[8]:

# Load catalog entries for subset into a dictionary of xarray datasets

dsets = col_subset.to_dataset_dict(zarr_kwargs={"consolidated": True}, storage_options={"anon": True})

print(f"\nDataset dictionary keys:\n {dsets.keys()}")

--> The keys in the returned dictionary of datasets are constructed as follows:

'component.experiment.frequency'

Dataset dictionary keys:

dict_keys(['atm.RCP85.monthly', 'atm.20C.monthly', 'atm.HIST.daily', 'atm.RCP85.daily', 'atm.20C.daily', 'atm.HIST.monthly'])

[9]:

# Define Xarray datasets corresponding to the three experiments

ds_HIST = dsets['atm.HIST.monthly']

ds_20C = dsets['atm.20C.daily']

ds_RCP85 = dsets['atm.RCP85.daily']

[10]:

# Use Dask.Distributed utility function to display size of each dataset

from distributed.utils import format_bytes

print(f"Historical: {format_bytes(ds_HIST.nbytes)}\n"

f"20th Century: {format_bytes(ds_20C.nbytes)}\n"

f"RCP8.5: {format_bytes(ds_RCP85.nbytes)}")

Historical: 185.82 MB

20th Century: 277.71 GB

RCP8.5: 306.78 GB

[11]:

# Extract the Reference Height Temperature data variable

t_hist = ds_HIST["TREFHT"]

t_20c = ds_20C["TREFHT"]

t_rcp = ds_RCP85["TREFHT"]

t_20c

[11]:

<xarray.DataArray 'TREFHT' (member_id: 40, time: 31389, lat: 192, lon: 288)>

dask.array<zarr, shape=(40, 31389, 192, 288), dtype=float32, chunksize=(1, 576, 192, 288), chunktype=numpy.ndarray>

Coordinates:

* lat (lat) float64 -90.0 -89.06 -88.12 -87.17 ... 88.12 89.06 90.0

* lon (lon) float64 0.0 1.25 2.5 3.75 5.0 ... 355.0 356.2 357.5 358.8

* member_id (member_id) int64 1 2 3 4 5 6 7 8 ... 34 35 101 102 103 104 105

* time (time) object 1920-01-01 12:00:00 ... 2005-12-30 12:00:00

Attributes:

cell_methods: time: mean

long_name: Reference height temperature

units: K- member_id: 40

- time: 31389

- lat: 192

- lon: 288

- dask.array<chunksize=(1, 576, 192, 288), meta=np.ndarray>

Array Chunk Bytes 277.71 GB 127.40 MB Shape (40, 31389, 192, 288) (1, 576, 192, 288) Count 2201 Tasks 2200 Chunks Type float32 numpy.ndarray - lat(lat)float64-90.0 -89.06 -88.12 ... 89.06 90.0

- axis :

- Y

- bounds :

- lat_bnds

- long_name :

- latitude

- standard_name :

- latitude

- units :

- degrees_north

array([-90. , -89.057594, -88.115181, -87.172775, -86.23037 , -85.287956, -84.345551, -83.403145, -82.460732, -81.518326, -80.575912, -79.633507, -78.691101, -77.748688, -76.806282, -75.863876, -74.921463, -73.979057, -73.036652, -72.094238, -71.151833, -70.209427, -69.267014, -68.324608, -67.382202, -66.439789, -65.497383, -64.554977, -63.612564, -62.670158, -61.727749, -60.785339, -59.842934, -58.900524, -57.958115, -57.015705, -56.073299, -55.13089 , -54.18848 , -53.246075, -52.303665, -51.361256, -50.41885 , -49.47644 , -48.534031, -47.591621, -46.649216, -45.706806, -44.764397, -43.821991, -42.879581, -41.937172, -40.994766, -40.052357, -39.109947, -38.167538, -37.225132, -36.282722, -35.340313, -34.397907, -33.455498, -32.513088, -31.570681, -30.628273, -29.685863, -28.743456, -27.801046, -26.858639, -25.916231, -24.973822, -24.031414, -23.089005, -22.146597, -21.204189, -20.26178 , -19.319372, -18.376963, -17.434555, -16.492147, -15.549738, -14.607329, -13.664922, -12.722513, -11.780105, -10.837696, -9.895288, -8.95288 , -8.010471, -7.068063, -6.125654, -5.183246, -4.240838, -3.298429, -2.356021, -1.413613, -0.471204, 0.471204, 1.413613, 2.356021, 3.298429, 4.240838, 5.183246, 6.125654, 7.068063, 8.010471, 8.95288 , 9.895288, 10.837696, 11.780105, 12.722513, 13.664922, 14.607329, 15.549738, 16.492147, 17.434555, 18.376963, 19.319372, 20.26178 , 21.204189, 22.146597, 23.089005, 24.031414, 24.973822, 25.916231, 26.858639, 27.801046, 28.743456, 29.685863, 30.628273, 31.570681, 32.513088, 33.455498, 34.397907, 35.340313, 36.282722, 37.225132, 38.167538, 39.109947, 40.052357, 40.994766, 41.937172, 42.879581, 43.821991, 44.764397, 45.706806, 46.649216, 47.591621, 48.534031, 49.47644 , 50.41885 , 51.361256, 52.303665, 53.246075, 54.18848 , 55.13089 , 56.073299, 57.015705, 57.958115, 58.900524, 59.842934, 60.785339, 61.727749, 62.670158, 63.612564, 64.554977, 65.497383, 66.439789, 67.382202, 68.324608, 69.267014, 70.209427, 71.151833, 72.094238, 73.036652, 73.979057, 74.921463, 75.863876, 76.806282, 77.748688, 78.691101, 79.633507, 80.575912, 81.518326, 82.460732, 83.403145, 84.345551, 85.287956, 86.23037 , 87.172775, 88.115181, 89.057594, 90. ]) - lon(lon)float640.0 1.25 2.5 ... 356.2 357.5 358.8

- long_name :

- longitude

- units :

- degrees_east

array([ 0. , 1.25, 2.5 , ..., 356.25, 357.5 , 358.75])

- member_id(member_id)int641 2 3 4 5 6 ... 101 102 103 104 105

array([ 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 101, 102, 103, 104, 105]) - time(time)object1920-01-01 12:00:00 ... 2005-12-...

- bounds :

- time_bnds

- long_name :

- time

array([cftime.DatetimeNoLeap(1920, 1, 1, 12, 0, 0, 0), cftime.DatetimeNoLeap(1920, 1, 2, 12, 0, 0, 0), cftime.DatetimeNoLeap(1920, 1, 3, 12, 0, 0, 0), ..., cftime.DatetimeNoLeap(2005, 12, 28, 12, 0, 0, 0), cftime.DatetimeNoLeap(2005, 12, 29, 12, 0, 0, 0), cftime.DatetimeNoLeap(2005, 12, 30, 12, 0, 0, 0)], dtype=object)

- cell_methods :

- time: mean

- long_name :

- Reference height temperature

- units :

- K

[12]:

# The global surface temperature anomaly was computed relative to the 1961-90 base period

# in the Kay et al. paper, so extract that time slice

t_ref = t_20c.sel(time=slice("1961", "1990"))

Read grid cell areas¶

Cell size varies with latitude, so this must be accounted for when computing the global mean.

[13]:

cat = col.search(frequency="static", component="atm", experiment=["20C"])

_, grid = cat.to_dataset_dict(aggregate=False, zarr_kwargs={"consolidated": True}).popitem()

grid

--> The keys in the returned dictionary of datasets are constructed as follows:

'component.frequency.experiment.variable.path.variable_long_name.dim_per_tstep.start.end'

[13]:

<xarray.Dataset>

Dimensions: (bnds: 2, ilev: 31, lat: 192, lev: 30, lon: 288, slat: 191, slon: 288)

Coordinates:

P0 float64 ...

area (lat, lon) float32 dask.array<chunksize=(192, 288), meta=np.ndarray>

gw (lat) float64 dask.array<chunksize=(192,), meta=np.ndarray>

hyai (ilev) float64 dask.array<chunksize=(31,), meta=np.ndarray>

hyam (lev) float64 dask.array<chunksize=(30,), meta=np.ndarray>

hybi (ilev) float64 dask.array<chunksize=(31,), meta=np.ndarray>

hybm (lev) float64 dask.array<chunksize=(30,), meta=np.ndarray>

* ilev (ilev) float64 2.255 5.032 10.16 18.56 ... 947.4 967.5 985.1 1e+03

* lat (lat) float64 -90.0 -89.06 -88.12 -87.17 ... 88.12 89.06 90.0

lat_bnds (lat, bnds) float64 dask.array<chunksize=(192, 2), meta=np.ndarray>

* lev (lev) float64 3.643 7.595 14.36 24.61 ... 936.2 957.5 976.3 992.6

* lon (lon) float64 0.0 1.25 2.5 3.75 5.0 ... 355.0 356.2 357.5 358.8

lon_bnds (lon, bnds) float64 dask.array<chunksize=(288, 2), meta=np.ndarray>

ntrk int32 ...

ntrm int32 ...

ntrn int32 ...

* slat (slat) float64 -89.53 -88.59 -87.64 -86.7 ... 87.64 88.59 89.53

* slon (slon) float64 -0.625 0.625 1.875 3.125 ... 355.6 356.9 358.1

w_stag (slat) float64 dask.array<chunksize=(191,), meta=np.ndarray>

wnummax (lat) int32 dask.array<chunksize=(192,), meta=np.ndarray>

Dimensions without coordinates: bnds

Data variables:

*empty*

Attributes:

intake_esm_dataset_key: atm.static.20C.nan.s3://ncar-cesm-lens/atm/stati...- bnds: 2

- ilev: 31

- lat: 192

- lev: 30

- lon: 288

- slat: 191

- slon: 288

- P0()float64...

- long_name :

- reference pressure

- units :

- Pa

array(100000.)

- area(lat, lon)float32dask.array<chunksize=(192, 288), meta=np.ndarray>

- long_name :

- Grid-Cell Area

- standard_name :

- cell_area

- units :

- m2

Array Chunk Bytes 221.18 kB 221.18 kB Shape (192, 288) (192, 288) Count 2 Tasks 1 Chunks Type float32 numpy.ndarray - gw(lat)float64dask.array<chunksize=(192,), meta=np.ndarray>

- long_name :

- gauss weights

Array Chunk Bytes 1.54 kB 1.54 kB Shape (192,) (192,) Count 2 Tasks 1 Chunks Type float64 numpy.ndarray - hyai(ilev)float64dask.array<chunksize=(31,), meta=np.ndarray>

- long_name :

- hybrid A coefficient at layer interfaces

Array Chunk Bytes 248 B 248 B Shape (31,) (31,) Count 2 Tasks 1 Chunks Type float64 numpy.ndarray - hyam(lev)float64dask.array<chunksize=(30,), meta=np.ndarray>

- long_name :

- hybrid A coefficient at layer midpoints

Array Chunk Bytes 240 B 240 B Shape (30,) (30,) Count 2 Tasks 1 Chunks Type float64 numpy.ndarray - hybi(ilev)float64dask.array<chunksize=(31,), meta=np.ndarray>

- long_name :

- hybrid B coefficient at layer interfaces

Array Chunk Bytes 248 B 248 B Shape (31,) (31,) Count 2 Tasks 1 Chunks Type float64 numpy.ndarray - hybm(lev)float64dask.array<chunksize=(30,), meta=np.ndarray>

- long_name :

- hybrid B coefficient at layer midpoints

Array Chunk Bytes 240 B 240 B Shape (30,) (30,) Count 2 Tasks 1 Chunks Type float64 numpy.ndarray - ilev(ilev)float642.255 5.032 10.16 ... 985.1 1e+03

- formula_terms :

- a: hyai b: hybi p0: P0 ps: PS

- long_name :

- hybrid level at interfaces (1000*(A+B))

- positive :

- down

- standard_name :

- atmosphere_hybrid_sigma_pressure_coordinate

- units :

- level

array([ 2.25524 , 5.031692, 10.157947, 18.555317, 30.669123, 45.867477, 63.323483, 80.701418, 94.941042, 111.693211, 131.401271, 154.586807, 181.863353, 213.952821, 251.704417, 296.117216, 348.366588, 409.835219, 482.149929, 567.224421, 652.332969, 730.445892, 796.363071, 845.353667, 873.715866, 900.324631, 924.964462, 947.432335, 967.538625, 985.11219 , 1000. ]) - lat(lat)float64-90.0 -89.06 -88.12 ... 89.06 90.0

- axis :

- Y

- bounds :

- lat_bnds

- long_name :

- latitude

- standard_name :

- latitude

- units :

- degrees_north

array([-90. , -89.057594, -88.115181, -87.172775, -86.23037 , -85.287956, -84.345551, -83.403145, -82.460732, -81.518326, -80.575912, -79.633507, -78.691101, -77.748688, -76.806282, -75.863876, -74.921463, -73.979057, -73.036652, -72.094238, -71.151833, -70.209427, -69.267014, -68.324608, -67.382202, -66.439789, -65.497383, -64.554977, -63.612564, -62.670158, -61.727749, -60.785339, -59.842934, -58.900524, -57.958115, -57.015705, -56.073299, -55.13089 , -54.18848 , -53.246075, -52.303665, -51.361256, -50.41885 , -49.47644 , -48.534031, -47.591621, -46.649216, -45.706806, -44.764397, -43.821991, -42.879581, -41.937172, -40.994766, -40.052357, -39.109947, -38.167538, -37.225132, -36.282722, -35.340313, -34.397907, -33.455498, -32.513088, -31.570681, -30.628273, -29.685863, -28.743456, -27.801046, -26.858639, -25.916231, -24.973822, -24.031414, -23.089005, -22.146597, -21.204189, -20.26178 , -19.319372, -18.376963, -17.434555, -16.492147, -15.549738, -14.607329, -13.664922, -12.722513, -11.780105, -10.837696, -9.895288, -8.95288 , -8.010471, -7.068063, -6.125654, -5.183246, -4.240838, -3.298429, -2.356021, -1.413613, -0.471204, 0.471204, 1.413613, 2.356021, 3.298429, 4.240838, 5.183246, 6.125654, 7.068063, 8.010471, 8.95288 , 9.895288, 10.837696, 11.780105, 12.722513, 13.664922, 14.607329, 15.549738, 16.492147, 17.434555, 18.376963, 19.319372, 20.26178 , 21.204189, 22.146597, 23.089005, 24.031414, 24.973822, 25.916231, 26.858639, 27.801046, 28.743456, 29.685863, 30.628273, 31.570681, 32.513088, 33.455498, 34.397907, 35.340313, 36.282722, 37.225132, 38.167538, 39.109947, 40.052357, 40.994766, 41.937172, 42.879581, 43.821991, 44.764397, 45.706806, 46.649216, 47.591621, 48.534031, 49.47644 , 50.41885 , 51.361256, 52.303665, 53.246075, 54.18848 , 55.13089 , 56.073299, 57.015705, 57.958115, 58.900524, 59.842934, 60.785339, 61.727749, 62.670158, 63.612564, 64.554977, 65.497383, 66.439789, 67.382202, 68.324608, 69.267014, 70.209427, 71.151833, 72.094238, 73.036652, 73.979057, 74.921463, 75.863876, 76.806282, 77.748688, 78.691101, 79.633507, 80.575912, 81.518326, 82.460732, 83.403145, 84.345551, 85.287956, 86.23037 , 87.172775, 88.115181, 89.057594, 90. ]) - lat_bnds(lat, bnds)float64dask.array<chunksize=(192, 2), meta=np.ndarray>

Array Chunk Bytes 3.07 kB 3.07 kB Shape (192, 2) (192, 2) Count 2 Tasks 1 Chunks Type float64 numpy.ndarray - lev(lev)float643.643 7.595 14.36 ... 976.3 992.6

- formula_terms :

- a: hyam b: hybm p0: P0 ps: PS

- long_name :

- hybrid level at midpoints (1000*(A+B))

- positive :

- down

- standard_name :

- atmosphere_hybrid_sigma_pressure_coordinate

- units :

- level

array([ 3.643466, 7.59482 , 14.356632, 24.61222 , 38.2683 , 54.59548 , 72.012451, 87.82123 , 103.317127, 121.547241, 142.994039, 168.22508 , 197.908087, 232.828619, 273.910817, 322.241902, 379.100904, 445.992574, 524.687175, 609.778695, 691.38943 , 763.404481, 820.858369, 859.534767, 887.020249, 912.644547, 936.198398, 957.48548 , 976.325407, 992.556095]) - lon(lon)float640.0 1.25 2.5 ... 356.2 357.5 358.8

- axis :

- X

- bounds :

- lon_bnds

- long_name :

- longitude

- standard_name :

- longitude

- units :

- degrees_east

array([ 0. , 1.25, 2.5 , ..., 356.25, 357.5 , 358.75])

- lon_bnds(lon, bnds)float64dask.array<chunksize=(288, 2), meta=np.ndarray>

Array Chunk Bytes 4.61 kB 4.61 kB Shape (288, 2) (288, 2) Count 2 Tasks 1 Chunks Type float64 numpy.ndarray - ntrk()int32...

- long_name :

- spectral truncation parameter K

array(1, dtype=int32)

- ntrm()int32...

- long_name :

- spectral truncation parameter M

array(1, dtype=int32)

- ntrn()int32...

- long_name :

- spectral truncation parameter N

array(1, dtype=int32)

- slat(slat)float64-89.53 -88.59 ... 88.59 89.53

- long_name :

- staggered latitude

- units :

- degrees_north

array([-89.528796, -88.586387, -87.643979, -86.701571, -85.759162, -84.816754, -83.874346, -82.931937, -81.989529, -81.04712 , -80.104712, -79.162304, -78.219895, -77.277487, -76.335079, -75.39267 , -74.450262, -73.507853, -72.565445, -71.623037, -70.680628, -69.73822 , -68.795812, -67.853403, -66.910995, -65.968586, -65.026178, -64.08377 , -63.141361, -62.198953, -61.256545, -60.314136, -59.371728, -58.429319, -57.486911, -56.544503, -55.602094, -54.659686, -53.717277, -52.774869, -51.832461, -50.890052, -49.947644, -49.005236, -48.062827, -47.120419, -46.17801 , -45.235602, -44.293194, -43.350785, -42.408377, -41.465969, -40.52356 , -39.581152, -38.638743, -37.696335, -36.753927, -35.811518, -34.86911 , -33.926702, -32.984293, -32.041885, -31.099476, -30.157068, -29.21466 , -28.272251, -27.329843, -26.387435, -25.445026, -24.502618, -23.560209, -22.617801, -21.675393, -20.732984, -19.790576, -18.848168, -17.905759, -16.963351, -16.020942, -15.078534, -14.136126, -13.193717, -12.251309, -11.308901, -10.366492, -9.424084, -8.481675, -7.539267, -6.596859, -5.65445 , -4.712042, -3.769634, -2.827225, -1.884817, -0.942408, 0. , 0.942408, 1.884817, 2.827225, 3.769634, 4.712042, 5.65445 , 6.596859, 7.539267, 8.481675, 9.424084, 10.366492, 11.308901, 12.251309, 13.193717, 14.136126, 15.078534, 16.020942, 16.963351, 17.905759, 18.848168, 19.790576, 20.732984, 21.675393, 22.617801, 23.560209, 24.502618, 25.445026, 26.387435, 27.329843, 28.272251, 29.21466 , 30.157068, 31.099476, 32.041885, 32.984293, 33.926702, 34.86911 , 35.811518, 36.753927, 37.696335, 38.638743, 39.581152, 40.52356 , 41.465969, 42.408377, 43.350785, 44.293194, 45.235602, 46.17801 , 47.120419, 48.062827, 49.005236, 49.947644, 50.890052, 51.832461, 52.774869, 53.717277, 54.659686, 55.602094, 56.544503, 57.486911, 58.429319, 59.371728, 60.314136, 61.256545, 62.198953, 63.141361, 64.08377 , 65.026178, 65.968586, 66.910995, 67.853403, 68.795812, 69.73822 , 70.680628, 71.623037, 72.565445, 73.507853, 74.450262, 75.39267 , 76.335079, 77.277487, 78.219895, 79.162304, 80.104712, 81.04712 , 81.989529, 82.931937, 83.874346, 84.816754, 85.759162, 86.701571, 87.643979, 88.586387, 89.528796]) - slon(slon)float64-0.625 0.625 1.875 ... 356.9 358.1

- long_name :

- staggered longitude

- units :

- degrees_east

array([ -0.625, 0.625, 1.875, ..., 355.625, 356.875, 358.125])

- w_stag(slat)float64dask.array<chunksize=(191,), meta=np.ndarray>

- long_name :

- staggered latitude weights

Array Chunk Bytes 1.53 kB 1.53 kB Shape (191,) (191,) Count 2 Tasks 1 Chunks Type float64 numpy.ndarray - wnummax(lat)int32dask.array<chunksize=(192,), meta=np.ndarray>

- long_name :

- cutoff Fourier wavenumber

Array Chunk Bytes 768 B 768 B Shape (192,) (192,) Count 2 Tasks 1 Chunks Type int32 numpy.ndarray

- intake_esm_dataset_key :

- atm.static.20C.nan.s3://ncar-cesm-lens/atm/static/grid.zarr.nan.nan.nan.nan

[14]:

cell_area = grid.area.load()

total_area = cell_area.sum()

cell_area

[14]:

<xarray.DataArray 'area' (lat: 192, lon: 288)>

array([[2.994837e+07, 2.994837e+07, 2.994837e+07, ..., 2.994837e+07,

2.994837e+07, 2.994837e+07],

[2.395748e+08, 2.395748e+08, 2.395748e+08, ..., 2.395748e+08,

2.395748e+08, 2.395748e+08],

[4.790848e+08, 4.790848e+08, 4.790848e+08, ..., 4.790848e+08,

4.790848e+08, 4.790848e+08],

...,

[4.790848e+08, 4.790848e+08, 4.790848e+08, ..., 4.790848e+08,

4.790848e+08, 4.790848e+08],

[2.395748e+08, 2.395748e+08, 2.395748e+08, ..., 2.395748e+08,

2.395748e+08, 2.395748e+08],

[2.994837e+07, 2.994837e+07, 2.994837e+07, ..., 2.994837e+07,

2.994837e+07, 2.994837e+07]], dtype=float32)

Coordinates:

P0 float64 1e+05

area (lat, lon) float32 29948370.0 29948370.0 ... 29948370.0 29948370.0

gw (lat) float64 3.382e-05 nan nan nan nan ... nan nan nan 3.382e-05

* lat (lat) float64 -90.0 -89.06 -88.12 -87.17 ... 87.17 88.12 89.06 90.0

* lon (lon) float64 0.0 1.25 2.5 3.75 5.0 ... 355.0 356.2 357.5 358.8

ntrk int32 1

ntrm int32 1

ntrn int32 1

wnummax (lat) int32 1 -2147483648 -2147483648 ... -2147483648 -2147483648 1

Attributes:

long_name: Grid-Cell Area

standard_name: cell_area

units: m2- lat: 192

- lon: 288

- 29948370.0 29948370.0 29948370.0 ... 29948370.0 29948370.0 29948370.0

array([[2.994837e+07, 2.994837e+07, 2.994837e+07, ..., 2.994837e+07, 2.994837e+07, 2.994837e+07], [2.395748e+08, 2.395748e+08, 2.395748e+08, ..., 2.395748e+08, 2.395748e+08, 2.395748e+08], [4.790848e+08, 4.790848e+08, 4.790848e+08, ..., 4.790848e+08, 4.790848e+08, 4.790848e+08], ..., [4.790848e+08, 4.790848e+08, 4.790848e+08, ..., 4.790848e+08, 4.790848e+08, 4.790848e+08], [2.395748e+08, 2.395748e+08, 2.395748e+08, ..., 2.395748e+08, 2.395748e+08, 2.395748e+08], [2.994837e+07, 2.994837e+07, 2.994837e+07, ..., 2.994837e+07, 2.994837e+07, 2.994837e+07]], dtype=float32) - P0()float641e+05

- long_name :

- reference pressure

- units :

- Pa

array(100000.)

- area(lat, lon)float3229948370.0 ... 29948370.0

- long_name :

- Grid-Cell Area

- standard_name :

- cell_area

- units :

- m2

array([[2.994837e+07, 2.994837e+07, 2.994837e+07, ..., 2.994837e+07, 2.994837e+07, 2.994837e+07], [2.395748e+08, 2.395748e+08, 2.395748e+08, ..., 2.395748e+08, 2.395748e+08, 2.395748e+08], [4.790848e+08, 4.790848e+08, 4.790848e+08, ..., 4.790848e+08, 4.790848e+08, 4.790848e+08], ..., [4.790848e+08, 4.790848e+08, 4.790848e+08, ..., 4.790848e+08, 4.790848e+08, 4.790848e+08], [2.395748e+08, 2.395748e+08, 2.395748e+08, ..., 2.395748e+08, 2.395748e+08, 2.395748e+08], [2.994837e+07, 2.994837e+07, 2.994837e+07, ..., 2.994837e+07, 2.994837e+07, 2.994837e+07]], dtype=float32) - gw(lat)float643.382e-05 nan nan ... nan 3.382e-05

- long_name :

- gauss weights

array([3.38174282e-05, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, ... nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 3.38174282e-05]) - lat(lat)float64-90.0 -89.06 -88.12 ... 89.06 90.0

- axis :

- Y

- bounds :

- lat_bnds

- long_name :

- latitude

- standard_name :

- latitude

- units :

- degrees_north

array([-90. , -89.057594, -88.115181, -87.172775, -86.23037 , -85.287956, -84.345551, -83.403145, -82.460732, -81.518326, -80.575912, -79.633507, -78.691101, -77.748688, -76.806282, -75.863876, -74.921463, -73.979057, -73.036652, -72.094238, -71.151833, -70.209427, -69.267014, -68.324608, -67.382202, -66.439789, -65.497383, -64.554977, -63.612564, -62.670158, -61.727749, -60.785339, -59.842934, -58.900524, -57.958115, -57.015705, -56.073299, -55.13089 , -54.18848 , -53.246075, -52.303665, -51.361256, -50.41885 , -49.47644 , -48.534031, -47.591621, -46.649216, -45.706806, -44.764397, -43.821991, -42.879581, -41.937172, -40.994766, -40.052357, -39.109947, -38.167538, -37.225132, -36.282722, -35.340313, -34.397907, -33.455498, -32.513088, -31.570681, -30.628273, -29.685863, -28.743456, -27.801046, -26.858639, -25.916231, -24.973822, -24.031414, -23.089005, -22.146597, -21.204189, -20.26178 , -19.319372, -18.376963, -17.434555, -16.492147, -15.549738, -14.607329, -13.664922, -12.722513, -11.780105, -10.837696, -9.895288, -8.95288 , -8.010471, -7.068063, -6.125654, -5.183246, -4.240838, -3.298429, -2.356021, -1.413613, -0.471204, 0.471204, 1.413613, 2.356021, 3.298429, 4.240838, 5.183246, 6.125654, 7.068063, 8.010471, 8.95288 , 9.895288, 10.837696, 11.780105, 12.722513, 13.664922, 14.607329, 15.549738, 16.492147, 17.434555, 18.376963, 19.319372, 20.26178 , 21.204189, 22.146597, 23.089005, 24.031414, 24.973822, 25.916231, 26.858639, 27.801046, 28.743456, 29.685863, 30.628273, 31.570681, 32.513088, 33.455498, 34.397907, 35.340313, 36.282722, 37.225132, 38.167538, 39.109947, 40.052357, 40.994766, 41.937172, 42.879581, 43.821991, 44.764397, 45.706806, 46.649216, 47.591621, 48.534031, 49.47644 , 50.41885 , 51.361256, 52.303665, 53.246075, 54.18848 , 55.13089 , 56.073299, 57.015705, 57.958115, 58.900524, 59.842934, 60.785339, 61.727749, 62.670158, 63.612564, 64.554977, 65.497383, 66.439789, 67.382202, 68.324608, 69.267014, 70.209427, 71.151833, 72.094238, 73.036652, 73.979057, 74.921463, 75.863876, 76.806282, 77.748688, 78.691101, 79.633507, 80.575912, 81.518326, 82.460732, 83.403145, 84.345551, 85.287956, 86.23037 , 87.172775, 88.115181, 89.057594, 90. ]) - lon(lon)float640.0 1.25 2.5 ... 356.2 357.5 358.8

- axis :

- X

- bounds :

- lon_bnds

- long_name :

- longitude

- standard_name :

- longitude

- units :

- degrees_east

array([ 0. , 1.25, 2.5 , ..., 356.25, 357.5 , 358.75])

- ntrk()int321

- long_name :

- spectral truncation parameter K

array(1, dtype=int32)

- ntrm()int321

- long_name :

- spectral truncation parameter M

array(1, dtype=int32)

- ntrn()int321

- long_name :

- spectral truncation parameter N

array(1, dtype=int32)

- wnummax(lat)int321 -2147483648 ... -2147483648 1

- long_name :

- cutoff Fourier wavenumber

array([ 1, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, -2147483648, 1], dtype=int32)

- long_name :

- Grid-Cell Area

- standard_name :

- cell_area

- units :

- m2

Define weighted means¶

Note: resample(time="AS") does an Annual resampling based on Start of calendar year.

See documentation for Pandas resampling options

[15]:

t_ref_ts = (

(t_ref.resample(time="AS").mean("time") * cell_area).sum(dim=("lat", "lon"))

/ total_area

).mean(dim=("time", "member_id"))

t_hist_ts = (

(t_hist.resample(time="AS").mean("time") * cell_area).sum(dim=("lat", "lon"))

) / total_area

t_20c_ts = (

(t_20c.resample(time="AS").mean("time") * cell_area).sum(dim=("lat", "lon"))

) / total_area

t_rcp_ts = (

(t_rcp.resample(time="AS").mean("time") * cell_area).sum(dim=("lat", "lon"))

) / total_area

Read data and compute means¶

Note: Dask’s “lazy execution” philosophy means that until this point we have not actually read the bulk of the data. These steps take a while to complete, so we include the Notebook “cell magic” directive %%time to display elapsed and CPU times after computation.

[16]:

%%time

t_ref_mean = t_ref_ts.load()

t_ref_mean

CPU times: user 4.22 s, sys: 84.4 ms, total: 4.31 s

Wall time: 56.6 s

[16]:

<xarray.DataArray ()>

array(286.38766, dtype=float32)

Coordinates:

P0 float64 1e+05

ntrk int32 1

ntrm int32 1

ntrn int32 1- 286.38766

array(286.38766, dtype=float32)

- P0()float641e+05

- long_name :

- reference pressure

- units :

- Pa

array(100000.)

- ntrk()int321

- long_name :

- spectral truncation parameter K

array(1, dtype=int32)

- ntrm()int321

- long_name :

- spectral truncation parameter M

array(1, dtype=int32)

- ntrn()int321

- long_name :

- spectral truncation parameter N

array(1, dtype=int32)

[17]:

%%time

t_hist_ts_df = t_hist_ts.to_series().T

t_hist_ts_df.head()

CPU times: user 227 ms, sys: 7.45 ms, total: 235 ms

Wall time: 2.47 s

[17]:

time

1850-01-01 00:00:00 286.205444

1851-01-01 00:00:00 286.273590

1852-01-01 00:00:00 286.248016

1853-01-01 00:00:00 286.240753

1854-01-01 00:00:00 286.150757

dtype: float32

[18]:

%%time

t_20c_ts_df = t_20c_ts.to_series().unstack().T

t_20c_ts_df.head()

distributed.client - WARNING - Couldn't gather 2 keys, rescheduling {"('truediv-b953cf0d57c0846bc584c86a2f3a3dcb', 20, 83)": (), "('truediv-b953cf0d57c0846bc584c86a2f3a3dcb', 6, 11)": ()}

CPU times: user 13 s, sys: 457 ms, total: 13.4 s

Wall time: 2min 17s

[18]:

| member_id | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | ... | 31 | 32 | 33 | 34 | 35 | 101 | 102 | 103 | 104 | 105 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| time | |||||||||||||||||||||

| 1920-01-01 00:00:00 | 286.311676 | 286.346863 | 286.283936 | 286.365051 | 286.328400 | 286.374512 | 286.387177 | 286.302338 | 286.376007 | 286.348663 | ... | 286.243713 | 286.283813 | 286.174377 | 286.309479 | 286.297028 | 286.342316 | 286.342346 | 286.378021 | 286.322083 | 286.255188 |

| 1921-01-01 00:00:00 | 286.249603 | 286.198578 | 286.287506 | 286.390289 | 286.309784 | 286.334747 | 286.309937 | 286.300873 | 286.314240 | 286.306244 | ... | 286.178558 | 286.316467 | 286.073853 | 286.296082 | 286.317352 | 286.374298 | 286.246185 | 286.355591 | 286.493042 | 286.224823 |

| 1922-01-01 00:00:00 | 286.293854 | 286.296844 | 286.265747 | 286.336914 | 286.293915 | 286.218872 | 286.010254 | 286.195770 | 286.205841 | 286.396942 | ... | 286.143463 | 286.316132 | 286.140900 | 286.294434 | 286.327911 | 286.142883 | 286.413239 | 286.368683 | 286.502197 | 286.281860 |

| 1923-01-01 00:00:00 | 286.327942 | 286.321838 | 286.251160 | 286.322327 | 286.236725 | 286.151855 | 286.066864 | 286.203583 | 286.271698 | 286.291260 | ... | 286.168610 | 286.300781 | 286.096191 | 286.115479 | 286.227142 | 286.226837 | 286.512238 | 286.381165 | 286.215485 | 286.396912 |

| 1924-01-01 00:00:00 | 286.308380 | 286.238281 | 286.147858 | 286.311798 | 286.362732 | 286.185822 | 286.249084 | 286.288116 | 286.330627 | 286.411957 | ... | 286.142395 | 286.287109 | 286.233795 | 286.201202 | 286.253906 | 286.323425 | 286.255035 | 286.221741 | 286.247589 | 286.421387 |

5 rows × 40 columns

[19]:

%%time

t_rcp_ts_df = t_rcp_ts.to_series().unstack().T

t_rcp_ts_df.head()

CPU times: user 13.9 s, sys: 299 ms, total: 14.2 s

Wall time: 1min 18s

[19]:

| member_id | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | ... | 31 | 32 | 33 | 34 | 35 | 101 | 102 | 103 | 104 | 105 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| time | |||||||||||||||||||||

| 2006-01-01 00:00:00 | 286.764832 | 286.960358 | 286.679230 | 286.793152 | 286.754547 | 287.022339 | 286.850464 | 287.089844 | 286.960022 | 286.775787 | ... | 286.866089 | 286.925049 | 286.663971 | 286.955414 | 286.712524 | 287.115601 | 286.863556 | 286.881683 | 287.308411 | 287.030334 |

| 2007-01-01 00:00:00 | 287.073792 | 286.908539 | 286.808746 | 286.998901 | 286.841675 | 286.993042 | 286.914124 | 286.938965 | 286.933563 | 286.675385 | ... | 286.804108 | 286.849548 | 286.628204 | 287.010529 | 286.811554 | 287.187225 | 286.862823 | 287.008270 | 287.222534 | 287.239044 |

| 2008-01-01 00:00:00 | 287.104095 | 286.815033 | 286.995056 | 287.081543 | 287.100708 | 286.960510 | 286.854706 | 286.878937 | 287.062927 | 286.702454 | ... | 286.825653 | 286.844086 | 286.811859 | 286.803741 | 286.956635 | 287.080994 | 286.930084 | 286.945801 | 287.087128 | 287.157745 |

| 2009-01-01 00:00:00 | 286.984497 | 287.059448 | 287.010498 | 287.144714 | 286.948700 | 287.092316 | 286.888458 | 287.050934 | 287.138428 | 286.890839 | ... | 286.785797 | 286.876556 | 286.953094 | 287.060364 | 287.056946 | 287.124908 | 287.005615 | 287.083984 | 287.254211 | 287.060730 |

| 2010-01-01 00:00:00 | 286.991821 | 287.102295 | 286.988129 | 286.875183 | 286.954407 | 287.121796 | 286.938843 | 287.116211 | 286.957245 | 287.049622 | ... | 286.937317 | 286.928314 | 286.980499 | 287.118713 | 287.178040 | 287.030212 | 287.114716 | 287.083038 | 287.256927 | 287.066528 |

5 rows × 40 columns

Get observations for figure 2 (HadCRUT4; Morice et al. 2012)¶

[20]:

# Observational time series data for comparison with ensemble average

obsDataURL = "https://www.esrl.noaa.gov/psd/thredds/dodsC/Datasets/cru/hadcrut4/air.mon.anom.median.nc"

[21]:

ds = xr.open_dataset(obsDataURL).load()

ds

[21]:

<xarray.Dataset>

Dimensions: (lat: 36, lon: 72, nbnds: 2, time: 2046)

Coordinates:

* lat (lat) float32 87.5 82.5 77.5 72.5 ... -72.5 -77.5 -82.5 -87.5

* lon (lon) float32 -177.5 -172.5 -167.5 -162.5 ... 167.5 172.5 177.5

* time (time) datetime64[ns] 1850-01-01 1850-02-01 ... 2020-06-01

Dimensions without coordinates: nbnds

Data variables:

time_bnds (time, nbnds) datetime64[ns] 1850-01-01 1850-01-31 ... 2020-06-30

air (time, lat, lon) float32 nan nan nan nan nan ... nan nan nan nan

Attributes:

platform: Surface

title: HADCRUT4 Combined Air Temperature/SST An...

history: Originally created at NOAA/ESRL PSD by C...

Conventions: CF-1.0

Comment: This dataset supersedes V3

Source: Obtained from http://hadobs.metoffice.co...

version: 4.2.0

dataset_title: HadCRUT4

References: https://www.psl.noaa.gov/data/gridded/da...

DODS_EXTRA.Unlimited_Dimension: time- lat: 36

- lon: 72

- nbnds: 2

- time: 2046

- lat(lat)float3287.5 82.5 77.5 ... -82.5 -87.5

- long_name :

- Latitude

- units :

- degrees_north

- actual_range :

- [ 87.5 -87.5]

- axis :

- Y

- coordinate_defines :

- point

- standard_name :

- latitude

- _ChunkSizes :

- 36

array([ 87.5, 82.5, 77.5, 72.5, 67.5, 62.5, 57.5, 52.5, 47.5, 42.5, 37.5, 32.5, 27.5, 22.5, 17.5, 12.5, 7.5, 2.5, -2.5, -7.5, -12.5, -17.5, -22.5, -27.5, -32.5, -37.5, -42.5, -47.5, -52.5, -57.5, -62.5, -67.5, -72.5, -77.5, -82.5, -87.5], dtype=float32) - lon(lon)float32-177.5 -172.5 ... 172.5 177.5

- long_name :

- Longitude

- units :

- degrees_east

- actual_range :

- [-177.5 177.5]

- axis :

- X

- coordinate_defines :

- point

- standard_name :

- longitude

- _ChunkSizes :

- 72

array([-177.5, -172.5, -167.5, -162.5, -157.5, -152.5, -147.5, -142.5, -137.5, -132.5, -127.5, -122.5, -117.5, -112.5, -107.5, -102.5, -97.5, -92.5, -87.5, -82.5, -77.5, -72.5, -67.5, -62.5, -57.5, -52.5, -47.5, -42.5, -37.5, -32.5, -27.5, -22.5, -17.5, -12.5, -7.5, -2.5, 2.5, 7.5, 12.5, 17.5, 22.5, 27.5, 32.5, 37.5, 42.5, 47.5, 52.5, 57.5, 62.5, 67.5, 72.5, 77.5, 82.5, 87.5, 92.5, 97.5, 102.5, 107.5, 112.5, 117.5, 122.5, 127.5, 132.5, 137.5, 142.5, 147.5, 152.5, 157.5, 162.5, 167.5, 172.5, 177.5], dtype=float32) - time(time)datetime64[ns]1850-01-01 ... 2020-06-01

- long_name :

- Time

- delta_t :

- 0000-01-00 00:00:00

- avg_period :

- 0000-01-00 00:00:00

- standard_name :

- time

- axis :

- T

- coordinate_defines :

- start

- bounds :

- time_bnds

- actual_range :

- [18262. 80505.]

- _ChunkSizes :

- 1

array(['1850-01-01T00:00:00.000000000', '1850-02-01T00:00:00.000000000', '1850-03-01T00:00:00.000000000', ..., '2020-04-01T00:00:00.000000000', '2020-05-01T00:00:00.000000000', '2020-06-01T00:00:00.000000000'], dtype='datetime64[ns]')

- time_bnds(time, nbnds)datetime64[ns]1850-01-01 ... 2020-06-30

- long_name :

- Time Boundaries

- _ChunkSizes :

- [1 2]

array([['1850-01-01T00:00:00.000000000', '1850-01-31T00:00:00.000000000'], ['1850-02-01T00:00:00.000000000', '1850-02-28T00:00:00.000000000'], ['1850-03-01T00:00:00.000000000', '1850-03-31T00:00:00.000000000'], ..., ['2020-04-01T00:00:00.000000000', '2020-04-30T00:00:00.000000000'], ['2020-05-01T00:00:00.000000000', '2020-05-31T00:00:00.000000000'], ['2020-06-01T00:00:00.000000000', '2020-06-30T00:00:00.000000000']], dtype='datetime64[ns]') - air(time, lat, lon)float32nan nan nan nan ... nan nan nan nan

- dataset :

- HADCRUT4

- var_desc :

- Air Temperature

- level_desc :

- Surface

- statistic :

- Anomaly

- parent_stat :

- Observation

- valid_range :

- [-40. 40.]

- units :

- degC

- long_name :

- HADCRUT4: Median Surface Air Temperature Monthly Median Anomaly from 100 ensemble members

- precision :

- 2

- cell_methods :

- time: anomaly (monthly from values)

- standard_name :

- air_temperature_anomaly

- actual_range :

- [-20.846687 20.620308]

- _ChunkSizes :

- [ 1 36 72]

array([[[ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], ..., [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan]], [[ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], ... [ nan, nan, nan, ..., 0.97774607, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan]], [[ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [0.5561051 , 1.9100599 , nan, ..., nan, nan, nan], ..., [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan]]], dtype=float32)

- platform :

- Surface

- title :

- HADCRUT4 Combined Air Temperature/SST Anomaly

- history :

- Originally created at NOAA/ESRL PSD by CAS 04/2012 from files obtained at the Hadley Center

- Conventions :

- CF-1.0

- Comment :

- This dataset supersedes V3

- Source :

- Obtained from http://hadobs.metoffice.com/ Data is a collaboration of CRU and the Hadley Center

- version :

- 4.2.0

- dataset_title :

- HadCRUT4

- References :

- https://www.psl.noaa.gov/data/gridded/data.hadcrut4.html

- DODS_EXTRA.Unlimited_Dimension :

- time

[22]:

def weighted_temporal_mean(ds):

"""

weight by days in each month

"""

time_bound_diff = ds.time_bnds.diff(dim="nbnds")[:, 0]

wgts = time_bound_diff.groupby("time.year") / time_bound_diff.groupby(

"time.year"

).sum(xr.ALL_DIMS)

np.testing.assert_allclose(wgts.groupby("time.year").sum(xr.ALL_DIMS), 1.0)

obs = ds["air"]

cond = obs.isnull()

ones = xr.where(cond, 0.0, 1.0)

obs_sum = (obs * wgts).resample(time="AS").sum(dim="time")

ones_out = (ones * wgts).resample(time="AS").sum(dim="time")

obs_s = (obs_sum / ones_out).mean(("lat", "lon")).to_series()

return obs_s

Limit Observations to 20th Century

[23]:

obs_s = weighted_temporal_mean(ds)

obs_s = obs_s['1920':]

obs_s.head()

[23]:

time

1920-01-01 -0.262006

1921-01-01 -0.195891

1922-01-01 -0.301986

1923-01-01 -0.269062

1924-01-01 -0.292857

Freq: AS-JAN, dtype: float64

[24]:

all_ts_anom = pd.concat([t_20c_ts_df, t_rcp_ts_df]) - t_ref_mean.data

years = [val.year for val in all_ts_anom.index]

obs_years = [val.year for val in obs_s.index]

[25]:

# Combine ensemble member 1 data from historical and 20th century experiments

hist_anom = t_hist_ts_df - t_ref_mean.data

member1 = pd.concat([hist_anom.iloc[:-2], all_ts_anom.iloc[:,0]], verify_integrity=True)

member1_years = [val.year for val in member1.index]

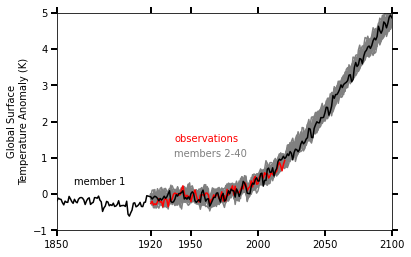

Figure 2: Global surface temperature anomaly (1961-90 base period) for individual ensemble members, and observations¶

[26]:

import matplotlib.pyplot as plt

[27]:

ax = plt.axes()

ax.tick_params(right=True, top=True, direction="out", length=6, width=2, grid_alpha=0.5)

ax.plot(years, all_ts_anom.iloc[:,1:], color="grey")

ax.plot(obs_years, obs_s['1920':], color="red")

ax.plot(member1_years, member1, color="black")

ax.text(

0.35,

0.4,

"observations",

verticalalignment="bottom",

horizontalalignment="left",

transform=ax.transAxes,

color="red",

fontsize=10,

)

ax.text(

0.35,

0.33,

"members 2-40",

verticalalignment="bottom",

horizontalalignment="left",

transform=ax.transAxes,

color="grey",

fontsize=10,

)

ax.text(

0.05,

0.2,

"member 1",

verticalalignment="bottom",

horizontalalignment="left",

transform=ax.transAxes,

color="black",

fontsize=10,

)

ax.set_xticks([1850, 1920, 1950, 2000, 2050, 2100])

plt.ylim(-1, 5)

plt.xlim(1850, 2100)

plt.ylabel("Global Surface\nTemperature Anomaly (K)")

plt.show()

Figure will appear above when ready. Compare with Fig.2 of Kay et al. 2015 (doi:10.1175/BAMS-D-13-00255.1)

Compute linear trend for winter seasons¶

[28]:

def linear_trend(da, dim="time"):

da_chunk = da.chunk({dim: -1})

trend = xr.apply_ufunc(

calc_slope,

da_chunk,

vectorize=True,

input_core_dims=[[dim]],

output_core_dims=[[]],

output_dtypes=[np.float],

dask="parallelized",

)

return trend

def calc_slope(y):

"""ufunc to be used by linear_trend"""

x = np.arange(len(y))

# drop missing values (NaNs) from x and y

finite_indexes = ~np.isnan(y)

slope = np.nan if (np.sum(finite_indexes) < 2) else np.polyfit(x[finite_indexes], y[finite_indexes], 1)[0]

return slope

Compute ensemble trends¶

[29]:

t = xr.concat([t_20c, t_rcp], dim="time")

seasons = t.sel(time=slice("1979", "2012")).resample(time="QS-DEC").mean("time")

# Include only full seasons from 1979 and 2012

seasons = seasons.sel(time=slice("1979", "2012")).load()

[30]:

winter_seasons = seasons.sel(

time=seasons.time.where(seasons.time.dt.month == 12, drop=True)

)

winter_trends = linear_trend(

winter_seasons.chunk({"lat": 20, "lon": 20, "time": -1})

).load() * len(winter_seasons.time)

# Compute ensemble mean from the first 30 members

winter_trends_mean = winter_trends.isel(member_id=range(30)).mean(dim='member_id')

[31]:

# Make sure that we have 34 seasons

assert len(winter_seasons.time) == 34

Get Observations for Figure 4 (NASA GISS GisTemp)¶

[32]:

# Observational time series data for comparison with ensemble average

# NASA GISS Surface Temperature Analysis, https://data.giss.nasa.gov/gistemp/

obsDataURL = "https://data.giss.nasa.gov/pub/gistemp/gistemp1200_GHCNv4_ERSSTv5.nc.gz"

[33]:

# Download, unzip, and load file

import os

os.system("wget " + obsDataURL)

obsDataFileName = obsDataURL.split('/')[-1]

os.system("gunzip " + obsDataFileName)

obsDataFileName = obsDataFileName[:-3]

ds = xr.open_dataset(obsDataFileName).load()

ds

[33]:

<xarray.Dataset>

Dimensions: (lat: 90, lon: 180, nv: 2, time: 1687)

Coordinates:

* lat (lat) float32 -89.0 -87.0 -85.0 -83.0 ... 83.0 85.0 87.0 89.0

* lon (lon) float32 -179.0 -177.0 -175.0 -173.0 ... 175.0 177.0 179.0

* time (time) datetime64[ns] 1880-01-15 1880-02-15 ... 2020-07-15

Dimensions without coordinates: nv

Data variables:

time_bnds (time, nv) datetime64[ns] 1880-01-01 1880-02-01 ... 2020-08-01

tempanomaly (time, lat, lon) float32 nan nan nan nan ... 0.55 0.55 0.55

Attributes:

title: GISTEMP Surface Temperature Analysis

institution: NASA Goddard Institute for Space Studies

source: http://data.giss.nasa.gov/gistemp/

Conventions: CF-1.6

history: Created 2020-08-13 09:26:17 by SBBX_to_nc 2.0 - ILAND=1200,...- lat: 90

- lon: 180

- nv: 2

- time: 1687

- lat(lat)float32-89.0 -87.0 -85.0 ... 87.0 89.0

- standard_name :

- latitude

- long_name :

- Latitude

- units :

- degrees_north

array([-89., -87., -85., -83., -81., -79., -77., -75., -73., -71., -69., -67., -65., -63., -61., -59., -57., -55., -53., -51., -49., -47., -45., -43., -41., -39., -37., -35., -33., -31., -29., -27., -25., -23., -21., -19., -17., -15., -13., -11., -9., -7., -5., -3., -1., 1., 3., 5., 7., 9., 11., 13., 15., 17., 19., 21., 23., 25., 27., 29., 31., 33., 35., 37., 39., 41., 43., 45., 47., 49., 51., 53., 55., 57., 59., 61., 63., 65., 67., 69., 71., 73., 75., 77., 79., 81., 83., 85., 87., 89.], dtype=float32) - lon(lon)float32-179.0 -177.0 ... 177.0 179.0

- standard_name :

- longitude

- long_name :

- Longitude

- units :

- degrees_east

array([-179., -177., -175., -173., -171., -169., -167., -165., -163., -161., -159., -157., -155., -153., -151., -149., -147., -145., -143., -141., -139., -137., -135., -133., -131., -129., -127., -125., -123., -121., -119., -117., -115., -113., -111., -109., -107., -105., -103., -101., -99., -97., -95., -93., -91., -89., -87., -85., -83., -81., -79., -77., -75., -73., -71., -69., -67., -65., -63., -61., -59., -57., -55., -53., -51., -49., -47., -45., -43., -41., -39., -37., -35., -33., -31., -29., -27., -25., -23., -21., -19., -17., -15., -13., -11., -9., -7., -5., -3., -1., 1., 3., 5., 7., 9., 11., 13., 15., 17., 19., 21., 23., 25., 27., 29., 31., 33., 35., 37., 39., 41., 43., 45., 47., 49., 51., 53., 55., 57., 59., 61., 63., 65., 67., 69., 71., 73., 75., 77., 79., 81., 83., 85., 87., 89., 91., 93., 95., 97., 99., 101., 103., 105., 107., 109., 111., 113., 115., 117., 119., 121., 123., 125., 127., 129., 131., 133., 135., 137., 139., 141., 143., 145., 147., 149., 151., 153., 155., 157., 159., 161., 163., 165., 167., 169., 171., 173., 175., 177., 179.], dtype=float32) - time(time)datetime64[ns]1880-01-15 ... 2020-07-15

- long_name :

- time

- bounds :

- time_bnds

array(['1880-01-15T00:00:00.000000000', '1880-02-15T00:00:00.000000000', '1880-03-15T00:00:00.000000000', ..., '2020-05-15T00:00:00.000000000', '2020-06-15T00:00:00.000000000', '2020-07-15T00:00:00.000000000'], dtype='datetime64[ns]')

- time_bnds(time, nv)datetime64[ns]1880-01-01 ... 2020-08-01

array([['1880-01-01T00:00:00.000000000', '1880-02-01T00:00:00.000000000'], ['1880-02-01T00:00:00.000000000', '1880-03-01T00:00:00.000000000'], ['1880-03-01T00:00:00.000000000', '1880-04-01T00:00:00.000000000'], ..., ['2020-05-01T00:00:00.000000000', '2020-06-01T00:00:00.000000000'], ['2020-06-01T00:00:00.000000000', '2020-07-01T00:00:00.000000000'], ['2020-07-01T00:00:00.000000000', '2020-08-01T00:00:00.000000000']], dtype='datetime64[ns]') - tempanomaly(time, lat, lon)float32nan nan nan nan ... 0.55 0.55 0.55

- long_name :

- Surface temperature anomaly

- units :

- K

- cell_methods :

- time: mean

array([[[ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], ..., [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan]], [[ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], ..., [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan]], [[ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], ..., ... ..., [3.51, 3.51, 3.51, ..., 3.51, 3.51, 3.51], [3.51, 3.51, 3.51, ..., 3.51, 3.51, 3.51], [3.51, 3.51, 3.51, ..., 3.51, 3.51, 3.51]], [[1.73, 1.73, 1.73, ..., 1.73, 1.73, 1.73], [1.73, 1.73, 1.73, ..., 1.73, 1.73, 1.73], [1.73, 1.73, 1.73, ..., 1.73, 1.73, 1.73], ..., [2.04, 2.04, 2.04, ..., 2.04, 2.04, 2.04], [2.04, 2.04, 2.04, ..., 2.04, 2.04, 2.04], [2.04, 2.04, 2.04, ..., 2.04, 2.04, 2.04]], [[0.07, 0.07, 0.07, ..., 0.07, 0.07, 0.07], [0.07, 0.07, 0.07, ..., 0.07, 0.07, 0.07], [0.07, 0.07, 0.07, ..., 0.07, 0.07, 0.07], ..., [0.55, 0.55, 0.55, ..., 0.55, 0.55, 0.55], [0.55, 0.55, 0.55, ..., 0.55, 0.55, 0.55], [0.55, 0.55, 0.55, ..., 0.55, 0.55, 0.55]]], dtype=float32)

- title :

- GISTEMP Surface Temperature Analysis

- institution :

- NASA Goddard Institute for Space Studies

- source :

- http://data.giss.nasa.gov/gistemp/

- Conventions :

- CF-1.6

- history :

- Created 2020-08-13 09:26:17 by SBBX_to_nc 2.0 - ILAND=1200, IOCEAN=NCDC/ER5, Base: 1951-1980

[34]:

# Remap longitude range from [-180, 180] to [0, 360] for plotting purposes

ds = ds.assign_coords(lon=((ds.lon + 360) % 360)).sortby('lon')

ds

[34]:

<xarray.Dataset>

Dimensions: (lat: 90, lon: 180, nv: 2, time: 1687)

Coordinates:

* lat (lat) float32 -89.0 -87.0 -85.0 -83.0 ... 83.0 85.0 87.0 89.0

* lon (lon) float32 1.0 3.0 5.0 7.0 9.0 ... 353.0 355.0 357.0 359.0

* time (time) datetime64[ns] 1880-01-15 1880-02-15 ... 2020-07-15

Dimensions without coordinates: nv

Data variables:

time_bnds (time, nv) datetime64[ns] 1880-01-01 1880-02-01 ... 2020-08-01

tempanomaly (time, lat, lon) float32 nan nan nan nan ... 0.55 0.55 0.55

Attributes:

title: GISTEMP Surface Temperature Analysis

institution: NASA Goddard Institute for Space Studies

source: http://data.giss.nasa.gov/gistemp/

Conventions: CF-1.6

history: Created 2020-08-13 09:26:17 by SBBX_to_nc 2.0 - ILAND=1200,...- lat: 90

- lon: 180

- nv: 2

- time: 1687

- lat(lat)float32-89.0 -87.0 -85.0 ... 87.0 89.0

- standard_name :

- latitude

- long_name :

- Latitude

- units :

- degrees_north

array([-89., -87., -85., -83., -81., -79., -77., -75., -73., -71., -69., -67., -65., -63., -61., -59., -57., -55., -53., -51., -49., -47., -45., -43., -41., -39., -37., -35., -33., -31., -29., -27., -25., -23., -21., -19., -17., -15., -13., -11., -9., -7., -5., -3., -1., 1., 3., 5., 7., 9., 11., 13., 15., 17., 19., 21., 23., 25., 27., 29., 31., 33., 35., 37., 39., 41., 43., 45., 47., 49., 51., 53., 55., 57., 59., 61., 63., 65., 67., 69., 71., 73., 75., 77., 79., 81., 83., 85., 87., 89.], dtype=float32) - lon(lon)float321.0 3.0 5.0 ... 355.0 357.0 359.0

array([ 1., 3., 5., 7., 9., 11., 13., 15., 17., 19., 21., 23., 25., 27., 29., 31., 33., 35., 37., 39., 41., 43., 45., 47., 49., 51., 53., 55., 57., 59., 61., 63., 65., 67., 69., 71., 73., 75., 77., 79., 81., 83., 85., 87., 89., 91., 93., 95., 97., 99., 101., 103., 105., 107., 109., 111., 113., 115., 117., 119., 121., 123., 125., 127., 129., 131., 133., 135., 137., 139., 141., 143., 145., 147., 149., 151., 153., 155., 157., 159., 161., 163., 165., 167., 169., 171., 173., 175., 177., 179., 181., 183., 185., 187., 189., 191., 193., 195., 197., 199., 201., 203., 205., 207., 209., 211., 213., 215., 217., 219., 221., 223., 225., 227., 229., 231., 233., 235., 237., 239., 241., 243., 245., 247., 249., 251., 253., 255., 257., 259., 261., 263., 265., 267., 269., 271., 273., 275., 277., 279., 281., 283., 285., 287., 289., 291., 293., 295., 297., 299., 301., 303., 305., 307., 309., 311., 313., 315., 317., 319., 321., 323., 325., 327., 329., 331., 333., 335., 337., 339., 341., 343., 345., 347., 349., 351., 353., 355., 357., 359.], dtype=float32) - time(time)datetime64[ns]1880-01-15 ... 2020-07-15

- long_name :

- time

- bounds :

- time_bnds

array(['1880-01-15T00:00:00.000000000', '1880-02-15T00:00:00.000000000', '1880-03-15T00:00:00.000000000', ..., '2020-05-15T00:00:00.000000000', '2020-06-15T00:00:00.000000000', '2020-07-15T00:00:00.000000000'], dtype='datetime64[ns]')

- time_bnds(time, nv)datetime64[ns]1880-01-01 ... 2020-08-01

array([['1880-01-01T00:00:00.000000000', '1880-02-01T00:00:00.000000000'], ['1880-02-01T00:00:00.000000000', '1880-03-01T00:00:00.000000000'], ['1880-03-01T00:00:00.000000000', '1880-04-01T00:00:00.000000000'], ..., ['2020-05-01T00:00:00.000000000', '2020-06-01T00:00:00.000000000'], ['2020-06-01T00:00:00.000000000', '2020-07-01T00:00:00.000000000'], ['2020-07-01T00:00:00.000000000', '2020-08-01T00:00:00.000000000']], dtype='datetime64[ns]') - tempanomaly(time, lat, lon)float32nan nan nan nan ... 0.55 0.55 0.55

- long_name :

- Surface temperature anomaly

- units :

- K

- cell_methods :

- time: mean

array([[[ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], ..., [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan]], [[ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], ..., [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan]], [[ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], [ nan, nan, nan, ..., nan, nan, nan], ..., ... ..., [3.51, 3.51, 3.51, ..., 3.51, 3.51, 3.51], [3.51, 3.51, 3.51, ..., 3.51, 3.51, 3.51], [3.51, 3.51, 3.51, ..., 3.51, 3.51, 3.51]], [[1.73, 1.73, 1.73, ..., 1.73, 1.73, 1.73], [1.73, 1.73, 1.73, ..., 1.73, 1.73, 1.73], [1.73, 1.73, 1.73, ..., 1.73, 1.73, 1.73], ..., [2.04, 2.04, 2.04, ..., 2.04, 2.04, 2.04], [2.04, 2.04, 2.04, ..., 2.04, 2.04, 2.04], [2.04, 2.04, 2.04, ..., 2.04, 2.04, 2.04]], [[0.07, 0.07, 0.07, ..., 0.07, 0.07, 0.07], [0.07, 0.07, 0.07, ..., 0.07, 0.07, 0.07], [0.07, 0.07, 0.07, ..., 0.07, 0.07, 0.07], ..., [0.55, 0.55, 0.55, ..., 0.55, 0.55, 0.55], [0.55, 0.55, 0.55, ..., 0.55, 0.55, 0.55], [0.55, 0.55, 0.55, ..., 0.55, 0.55, 0.55]]], dtype=float32)

- title :

- GISTEMP Surface Temperature Analysis

- institution :

- NASA Goddard Institute for Space Studies

- source :

- http://data.giss.nasa.gov/gistemp/

- Conventions :

- CF-1.6

- history :

- Created 2020-08-13 09:26:17 by SBBX_to_nc 2.0 - ILAND=1200, IOCEAN=NCDC/ER5, Base: 1951-1980

Compute observed trends¶

[35]:

obs_seasons = ds.sel(time=slice("1979", "2012")).resample(time="QS-DEC").mean("time")

# Include only full seasons from 1979 through 2012

obs_seasons = obs_seasons.sel(time=slice("1979", "2012")).load()

# Compute observed winter trends

obs_winter_seasons = obs_seasons.sel(

time=obs_seasons.time.where(obs_seasons.time.dt.month == 12, drop=True)

)

obs_winter_seasons

[35]:

<xarray.Dataset>

Dimensions: (lat: 90, lon: 180, time: 34)

Coordinates:

* time (time) datetime64[ns] 1979-12-01 1980-12-01 ... 2012-12-01

* lon (lon) float32 1.0 3.0 5.0 7.0 9.0 ... 353.0 355.0 357.0 359.0

* lat (lat) float32 -89.0 -87.0 -85.0 -83.0 ... 83.0 85.0 87.0 89.0

Data variables:

tempanomaly (time, lat, lon) float32 0.5333333 0.5333333 ... 7.47 7.47- lat: 90

- lon: 180

- time: 34

- time(time)datetime64[ns]1979-12-01 ... 2012-12-01

array(['1979-12-01T00:00:00.000000000', '1980-12-01T00:00:00.000000000', '1981-12-01T00:00:00.000000000', '1982-12-01T00:00:00.000000000', '1983-12-01T00:00:00.000000000', '1984-12-01T00:00:00.000000000', '1985-12-01T00:00:00.000000000', '1986-12-01T00:00:00.000000000', '1987-12-01T00:00:00.000000000', '1988-12-01T00:00:00.000000000', '1989-12-01T00:00:00.000000000', '1990-12-01T00:00:00.000000000', '1991-12-01T00:00:00.000000000', '1992-12-01T00:00:00.000000000', '1993-12-01T00:00:00.000000000', '1994-12-01T00:00:00.000000000', '1995-12-01T00:00:00.000000000', '1996-12-01T00:00:00.000000000', '1997-12-01T00:00:00.000000000', '1998-12-01T00:00:00.000000000', '1999-12-01T00:00:00.000000000', '2000-12-01T00:00:00.000000000', '2001-12-01T00:00:00.000000000', '2002-12-01T00:00:00.000000000', '2003-12-01T00:00:00.000000000', '2004-12-01T00:00:00.000000000', '2005-12-01T00:00:00.000000000', '2006-12-01T00:00:00.000000000', '2007-12-01T00:00:00.000000000', '2008-12-01T00:00:00.000000000', '2009-12-01T00:00:00.000000000', '2010-12-01T00:00:00.000000000', '2011-12-01T00:00:00.000000000', '2012-12-01T00:00:00.000000000'], dtype='datetime64[ns]') - lon(lon)float321.0 3.0 5.0 ... 355.0 357.0 359.0

array([ 1., 3., 5., 7., 9., 11., 13., 15., 17., 19., 21., 23., 25., 27., 29., 31., 33., 35., 37., 39., 41., 43., 45., 47., 49., 51., 53., 55., 57., 59., 61., 63., 65., 67., 69., 71., 73., 75., 77., 79., 81., 83., 85., 87., 89., 91., 93., 95., 97., 99., 101., 103., 105., 107., 109., 111., 113., 115., 117., 119., 121., 123., 125., 127., 129., 131., 133., 135., 137., 139., 141., 143., 145., 147., 149., 151., 153., 155., 157., 159., 161., 163., 165., 167., 169., 171., 173., 175., 177., 179., 181., 183., 185., 187., 189., 191., 193., 195., 197., 199., 201., 203., 205., 207., 209., 211., 213., 215., 217., 219., 221., 223., 225., 227., 229., 231., 233., 235., 237., 239., 241., 243., 245., 247., 249., 251., 253., 255., 257., 259., 261., 263., 265., 267., 269., 271., 273., 275., 277., 279., 281., 283., 285., 287., 289., 291., 293., 295., 297., 299., 301., 303., 305., 307., 309., 311., 313., 315., 317., 319., 321., 323., 325., 327., 329., 331., 333., 335., 337., 339., 341., 343., 345., 347., 349., 351., 353., 355., 357., 359.], dtype=float32) - lat(lat)float32-89.0 -87.0 -85.0 ... 87.0 89.0

- standard_name :

- latitude

- long_name :

- Latitude

- units :

- degrees_north

array([-89., -87., -85., -83., -81., -79., -77., -75., -73., -71., -69., -67., -65., -63., -61., -59., -57., -55., -53., -51., -49., -47., -45., -43., -41., -39., -37., -35., -33., -31., -29., -27., -25., -23., -21., -19., -17., -15., -13., -11., -9., -7., -5., -3., -1., 1., 3., 5., 7., 9., 11., 13., 15., 17., 19., 21., 23., 25., 27., 29., 31., 33., 35., 37., 39., 41., 43., 45., 47., 49., 51., 53., 55., 57., 59., 61., 63., 65., 67., 69., 71., 73., 75., 77., 79., 81., 83., 85., 87., 89.], dtype=float32)

- tempanomaly(time, lat, lon)float320.5333333 0.5333333 ... 7.47 7.47

array([[[ 0.5333333 , 0.5333333 , 0.5333333 , ..., 0.5333333 , 0.5333333 , 0.5333333 ], [ 0.5333333 , 0.5333333 , 0.5333333 , ..., 0.5333333 , 0.5333333 , 0.5333333 ], [ 0.5333333 , 0.5333333 , 0.5333333 , ..., 0.5333333 , 0.5333333 , 0.5333333 ], ..., [ 0.26333335, 0.26333335, 0.26333335, ..., 0.26333335, 0.26333335, 0.26333335], [ 0.26333335, 0.26333335, 0.26333335, ..., 0.26333335, 0.26333335, 0.26333335], [ 0.26333335, 0.26333335, 0.26333335, ..., 0.26333335, 0.26333335, 0.26333335]], [[ 2.533333 , 2.533333 , 2.533333 , ..., 2.533333 , 2.533333 , 2.533333 ], [ 2.533333 , 2.533333 , 2.533333 , ..., 2.533333 , 2.533333 , 2.533333 ], [ 2.533333 , 2.533333 , 2.533333 , ..., 2.533333 , 2.533333 , 2.533333 ], ... [ 6.636667 , 6.636667 , 6.636667 , ..., 6.636667 , 6.636667 , 6.636667 ], [ 6.636667 , 6.636667 , 6.636667 , ..., 6.636667 , 6.636667 , 6.636667 ], [ 6.636667 , 6.636667 , 6.636667 , ..., 6.636667 , 6.636667 , 6.636667 ]], [[ 3.1999998 , 3.1999998 , 3.1999998 , ..., 3.1999998 , 3.1999998 , 3.1999998 ], [ 3.1999998 , 3.1999998 , 3.1999998 , ..., 3.1999998 , 3.1999998 , 3.1999998 ], [ 3.1999998 , 3.1999998 , 3.1999998 , ..., 3.1999998 , 3.1999998 , 3.1999998 ], ..., [ 7.47 , 7.47 , 7.47 , ..., 7.47 , 7.47 , 7.47 ], [ 7.47 , 7.47 , 7.47 , ..., 7.47 , 7.47 , 7.47 ], [ 7.47 , 7.47 , 7.47 , ..., 7.47 , 7.47 , 7.47 ]]], dtype=float32)

[36]:

obs_winter_trends = linear_trend(

obs_winter_seasons.chunk({"lat": 20, "lon": 20, "time": -1})

).load() * len(obs_winter_seasons.time)

obs_winter_trends

[36]:

<xarray.Dataset>

Dimensions: (lat: 90, lon: 180)

Coordinates:

* lon (lon) float32 1.0 3.0 5.0 7.0 9.0 ... 353.0 355.0 357.0 359.0

* lat (lat) float32 -89.0 -87.0 -85.0 -83.0 ... 83.0 85.0 87.0 89.0

Data variables:

tempanomaly (lat, lon) float64 1.284 1.284 1.284 ... 5.672 5.672 5.672- lat: 90

- lon: 180

- lon(lon)float321.0 3.0 5.0 ... 355.0 357.0 359.0

array([ 1., 3., 5., 7., 9., 11., 13., 15., 17., 19., 21., 23., 25., 27., 29., 31., 33., 35., 37., 39., 41., 43., 45., 47., 49., 51., 53., 55., 57., 59., 61., 63., 65., 67., 69., 71., 73., 75., 77., 79., 81., 83., 85., 87., 89., 91., 93., 95., 97., 99., 101., 103., 105., 107., 109., 111., 113., 115., 117., 119., 121., 123., 125., 127., 129., 131., 133., 135., 137., 139., 141., 143., 145., 147., 149., 151., 153., 155., 157., 159., 161., 163., 165., 167., 169., 171., 173., 175., 177., 179., 181., 183., 185., 187., 189., 191., 193., 195., 197., 199., 201., 203., 205., 207., 209., 211., 213., 215., 217., 219., 221., 223., 225., 227., 229., 231., 233., 235., 237., 239., 241., 243., 245., 247., 249., 251., 253., 255., 257., 259., 261., 263., 265., 267., 269., 271., 273., 275., 277., 279., 281., 283., 285., 287., 289., 291., 293., 295., 297., 299., 301., 303., 305., 307., 309., 311., 313., 315., 317., 319., 321., 323., 325., 327., 329., 331., 333., 335., 337., 339., 341., 343., 345., 347., 349., 351., 353., 355., 357., 359.], dtype=float32) - lat(lat)float32-89.0 -87.0 -85.0 ... 87.0 89.0

- standard_name :

- latitude

- long_name :

- Latitude

- units :

- degrees_north

array([-89., -87., -85., -83., -81., -79., -77., -75., -73., -71., -69., -67., -65., -63., -61., -59., -57., -55., -53., -51., -49., -47., -45., -43., -41., -39., -37., -35., -33., -31., -29., -27., -25., -23., -21., -19., -17., -15., -13., -11., -9., -7., -5., -3., -1., 1., 3., 5., 7., 9., 11., 13., 15., 17., 19., 21., 23., 25., 27., 29., 31., 33., 35., 37., 39., 41., 43., 45., 47., 49., 51., 53., 55., 57., 59., 61., 63., 65., 67., 69., 71., 73., 75., 77., 79., 81., 83., 85., 87., 89.], dtype=float32)

- tempanomaly(lat, lon)float641.284 1.284 1.284 ... 5.672 5.672

array([[1.2841905 , 1.2841905 , 1.2841905 , ..., 1.2841905 , 1.2841905 , 1.2841905 ], [1.2841905 , 1.2841905 , 1.2841905 , ..., 1.2841905 , 1.2841905 , 1.2841905 ], [1.2841905 , 1.2841905 , 1.2841905 , ..., 1.2841905 , 1.2841905 , 1.2841905 ], ..., [5.67154961, 5.67154961, 5.67154961, ..., 5.67154961, 5.67154961, 5.67154961], [5.67154961, 5.67154961, 5.67154961, ..., 5.67154961, 5.67154961, 5.67154961], [5.67154961, 5.67154961, 5.67154961, ..., 5.67154961, 5.67154961, 5.67154961]])

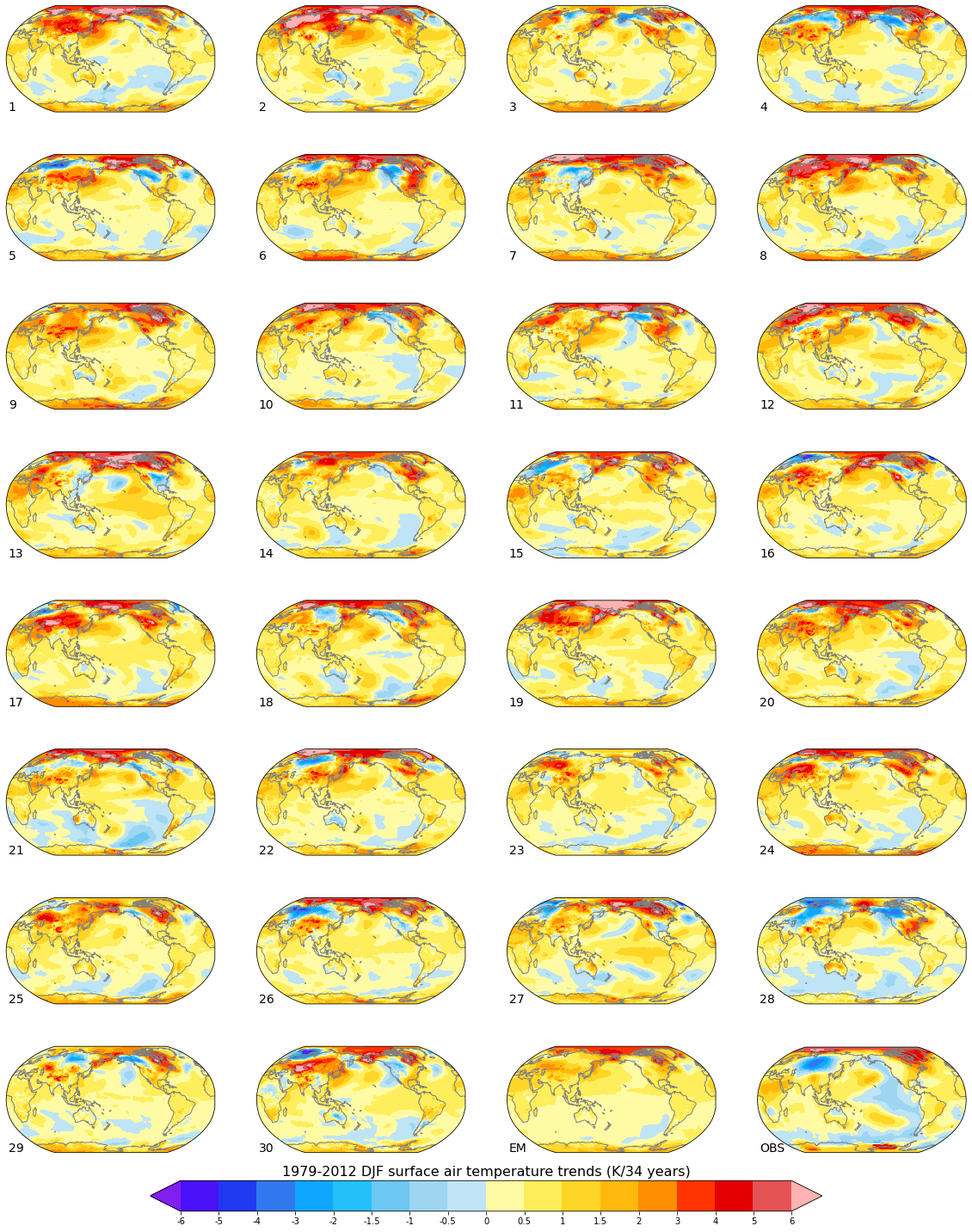

Figure 4: Global maps of historical (1979 - 2012) boreal winter (DJF) surface air trends¶

[37]:

import cmaps # for NCL colormaps

import cartopy.crs as ccrs

import matplotlib.pyplot as plt

contour_levels = [-6, -5, -4, -3, -2, -1.5, -1, -0.5, 0, 0.5, 1, 1.5, 2, 3, 4, 5, 6]

color_map = cmaps.ncl_default

[38]:

def make_map_plot(nplot_rows, nplot_cols, plot_index, data, plot_label):

""" Create a single map subplot. """

ax = plt.subplot(nplot_rows, nplot_cols, plot_index, projection = ccrs.Robinson(central_longitude = 180))

cplot = plt.contourf(lons, lats, data,

levels = contour_levels,

cmap = color_map,

extend = 'both',

transform = ccrs.PlateCarree())

ax.coastlines(color = 'grey')

ax.text(0.01, 0.01, plot_label, fontSize = 14, transform = ax.transAxes)

return cplot, ax

[39]:

# Generate plot (may take a while as many individual maps are generated)

numPlotRows = 8

numPlotCols = 4

figWidth = 20

figHeight = 30

fig, axs = plt.subplots(numPlotRows, numPlotCols, figsize=(figWidth,figHeight))

lats = winter_trends.lat

lons = winter_trends.lon

# Create ensemble member plots

for ensemble_index in range(30):

plot_data = winter_trends.isel(member_id = ensemble_index)

plot_index = ensemble_index + 1

plot_label = str(plot_index)

plotRow = ensemble_index // numPlotCols

plotCol = ensemble_index % numPlotCols

# Retain axes objects for figure colorbar

cplot, axs[plotRow, plotCol] = make_map_plot(numPlotRows, numPlotCols, plot_index, plot_data, plot_label)

# Create plots for the ensemble mean, observations, and a figure color bar.

cplot, axs[7,2] = make_map_plot(numPlotRows, numPlotCols, 31, winter_trends_mean, 'EM')

lats = obs_winter_trends.lat

lons = obs_winter_trends.lon

cplot, axs[7,3] = make_map_plot(numPlotRows, numPlotCols, 32, obs_winter_trends.tempanomaly, 'OBS')

cbar = fig.colorbar(cplot, ax=axs, orientation='horizontal', shrink = 0.7, pad = 0.02)

cbar.ax.set_title('1979-2012 DJF surface air temperature trends (K/34 years)', fontSize = 16)

cbar.set_ticks(contour_levels)

cbar.set_ticklabels(contour_levels)

Figure will appear above when ready. Compare with Fig. 4 of Kay et al. 2015 (doi:10.1175/BAMS-D-13-00255.1).

[40]:

# Gracefully destroy/close our cluster

client.close()

cluster.close()